Recently I met an issue that one of our APIs of the online production servers is very slow due to redis scan.

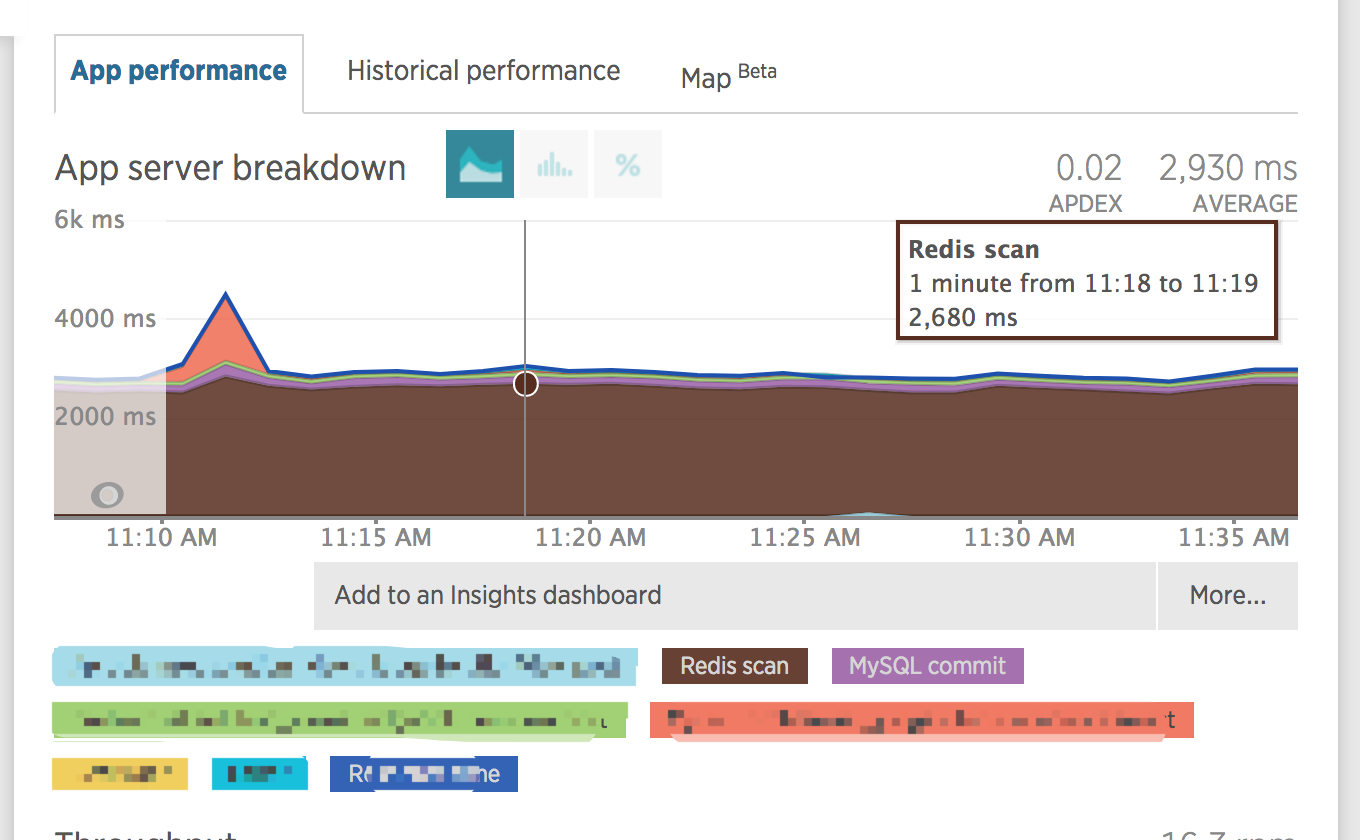

From next graph generated by NewRelic, we found AVERAGE response time is 2930ms while redis scan could take 2680ms, which is 2680/2930 = 91.4% portion of the total time.

Why redis scan cost this much? From our local environment, we never noticed such huge performance issue.

1. Locate the culprit

1.1. Phenomenon

After that I dug into the source code and found next code snippet in our Django Application and this is the only code of this API to access redis, so this must be the culprit of this issue.

1 | from django.core.cache import cache |

From the above code, we could know that redis is treated as a cache server here.

So what does delete_pattern do on the earth? So I traced the code and in the end found next delete_pattern definition from django_redis.client.default.DefaultClient#delete_pattern.

1 | def delete_pattern(self, pattern, version=None, prefix=None, client=None): |

The code calls client.scan_iter(pattern) to get next cursor and try to delete that key of the cursor.

1.2. Redis scan

From the official site of redis scan, we can combine MATCH and COUNT in the SCAN command.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33redis 127.0.0.1:6379> scan 0 MATCH *11*

1) "288"

2) 1) "key:911"

redis 127.0.0.1:6379> scan 288 MATCH *11*

1) "224"

2) (empty list or set)

redis 127.0.0.1:6379> scan 224 MATCH *11*

1) "80"

2) (empty list or set)

redis 127.0.0.1:6379> scan 80 MATCH *11*

1) "176"

2) (empty list or set)

redis 127.0.0.1:6379> scan 176 MATCH *11* COUNT 1000

1) "0"

2) 1) "key:611"

2) "key:711"

3) "key:118"

4) "key:117"

5) "key:311"

6) "key:112"

7) "key:111"

8) "key:110"

9) "key:113"

10) "key:211"

11) "key:411"

12) "key:115"

13) "key:116"

14) "key:114"

15) "key:119"

16) "key:811"

17) "key:511"

18) "key:11"

redis 127.0.0.1:6379>

The above command returned me with a 0 means, in the first line of response, there is no more data to scan. If not 0, the number is next cursor to be used to scan.

It is important to note that the MATCH filter is applied

afterelements are retrieved from the collection, just before returning data to the client.

This means, firstly it will retrieve the data, and then will use a filter to match the retrieved data.

Does this means there could be multiple back-and-forth between the client and server, and which will cost the huge latency of this redis scan? If that’s true, we just need an extra easy COUNT parameter.

2. Validation in local environment

2.1. KEYS number

How many keys are there in our online production redis server?1

2

3127.0.0.1:6379[10]> INFO

# more code is ommitted here

db10:keys=6986,expires=6986,avg_ttl=40118300

From above code, we can know there are nearly 7000 keys in our server.

2.2. Client-Server latency

I simply use the ping command to get the latency and it’s 3.5 ms. Due to classified cause, sensitive info is hidden.1

2

3

4

5

6

7[xxx@xxxx.xxx.xxx.xxx ~]$ ping xxxx.com

PING xxxx.com (xx.xx.xx.xx) 56(84) bytes of data.

64 bytes from xxxx.com (xx.xx.xx.xx): icmp_seq=1 ttl=58 time=3.58 ms

64 bytes from xxxx.com (xx.xx.xx.xx): icmp_seq=2 ttl=58 time=3.46 ms

--- xxxx.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 3004ms

rtt min/avg/max/mdev = 3.461/3.498/3.585/0.050 ms

2.3. Simulate the Network Latency Locally

From this post, I successfully simulated the network latency.1

2

3

4

5

6

7(venv) vagrant@localhost:~ $ sudo tc qdisc add dev lo root netem delay 2ms

(venv) vagrant@localhost:~ $ ping 127.0.0.1

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=4.34 ms

--- 127.0.0.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 4.348/4.348/4.348/0.000 ms

So I made the lo NIC as slow as around 4.3 ms.

Other commands to be used for tc qdisc:

baisc syntax: tc qdisc add/del dev lo/eth0/eth1 root netem [delay 2ms]1

2

3

4

5

6

7

8# add rule to lo NIC

tc qdisc add dev lo root netem delay 2ms

# add rule to eth0 NIC

tc qdisc add dev eth0 root netem delay 2ms

# test

tc -s qdisc

# delete rule

tc qdisc del dev eth0 root netem

2.4. Measure the time of cleaning the cache before and after setting the delay

Since this is a Django application, so I can use Django Shell to try to execute the clean cache statement, which as a result operate the local redis server.1

2

3

4

5(venv) vagrant@localhost:~ $ time python manage.py shell -c 'from django.core.cache import cache; cache.delete_pattern("view_cache:dispatch:123456789:category_slug:all")'

real 0m8.404s

user 0m0.944s

sys 0m0.696s

What if we don’t set the delay option, what’s would the timing metric be?1

2

3

4

5(venv) vagrant@lcoalhost:~ $ time python manage.py shell -c 'from django.core.cache import cache; cache.delete("view_cache:dispatch:123456789:category_slug:all")'

real 0m4.366s

user 0m0.800s

sys 0m0.648s

From the above test, we can see that before and after will make a huge difference as large as 4 seconds !!! And this is under the network latency of 4.3 ms. If the network latency is around 3.5 ms, the total time of cleaning cache could be as large as 3 seconds, which is very close the above phenomenon!

Now, we can get a conclusion: network latency will influence too much on the redis scan performance. SCAN will call the redis multiple times back-and-forth, which in the end, cause a large redis scan time.

How to solve the problem? Use the COUNT parameter!

3. Solve the problem

3.1. Estimation of deleting time again

From the definition of delete_pattern method, we can see it just scan_iter the redis using the default COUNT which is 10. Let’s give an estimation here:

- The

Redis Scanuse a sequential scan withCOUNTas 10, there will be 6986/10 = 700 times to scan. - Each scan costs at least 1 ping time, which is 3.5 ms.

- In total, 3.5*700 = 2450 ms, which is close to the 2680 ms of the phenomenon above. Interesting.

From above estimation, we are more confident of our guess now, and using the COUNT must be the solution!

But the delete_pattern method provided by the default client doesn’t use COUNT parameter, so what I can do is to create a new Redis Client which is inherited from the default Client and override this delete_pattern method to use the COUNT parameter.

Here is my code:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33import socket

# Compatibility with redis-py 2.10.x+

from redis.exceptions import ConnectionError

from redis.exceptions import ResponseError

from django_redis.exceptions import ConnectionInterrupted, CompressorError

from django_redis.client import DefaultClient

try:

from redis.exceptions import TimeoutError, ResponseError

_main_exceptions = (TimeoutError, ResponseError, ConnectionError, socket.timeout)

except ImportError:

_main_exceptions = (ConnectionError, socket.timeout)

class CacheClient(DefaultClient):

def delete_pattern(self, pattern, itersize=None, version=None, prefix=None, client=None):

"""

Remove all keys matching pattern.

"""

if client is None:

client = self.get_client(write=True)

pattern = self.make_key(pattern, version=version, prefix=prefix)

try:

count = 0

for key in client.scan_iter(pattern, count=itersize): // have a itersize here

client.delete(key)

count += 1

return count

except _main_exceptions as e:

raise ConnectionInterrupted(connection=client, parent=e)

When I call the code, I make sure to pass the itersize very large, say, 10,000 to eliminate too many back-and-forth RPC calls.

3.2. Enable the customized redis client class

Of course we need to enable this client in our settings/local.py1

2

3

4

5

6

7

8

9

10

11

12CACHES = {

'default': {

'BACKEND': 'django_redis.cache.RedisCache',

# redis server

'LOCATION':

os.environ.get('NOJ_REDIS_CACHE_URL', 'redis://localhost:6379/10'),

'OPTIONS': {

'PARSER_CLASS': 'redis.connection.HiredisParser',

'CLIENT_CLASS': 'common.cache_client.CacheClient' // enable the customized redis client class

}

}

}

3.3. Test after fixing

I added a 10000 after my delete_pattern call.1

2

3

4

5(venv) vagrant@localhost:~ $ time python manage.py shell -c 'from django.core.cache import cache; cache.delete_pattern("view_cache:dispatch:123456789:category_slug:all",10000)'

real 0m4.021s

user 0m0.784s

sys 0m0.612s

The time now is very close to the time before setting the network latency, which means, delete_pattern won’t be a performance issue anymore, since it only has one RPC call now.

Cheers, bro!

4. Conclusion

- Don’t be afraid of the library source code, just dig into it.

- Use

tccommand to simulate the network latency. MATCHinRedis Scanis to filter on top of the retrieved data.- Default

COUNTofRedis Scanis 10. - Use

python manage.py shellto execute ad-hoc test. - Use

pingto get network latency. - Use

INFOto get redis statistical information.

References