Just a post about what we need to set up ElasticSearch/Fluentd/Kibana (EFK). For details on how they work, please refer to the official sites.

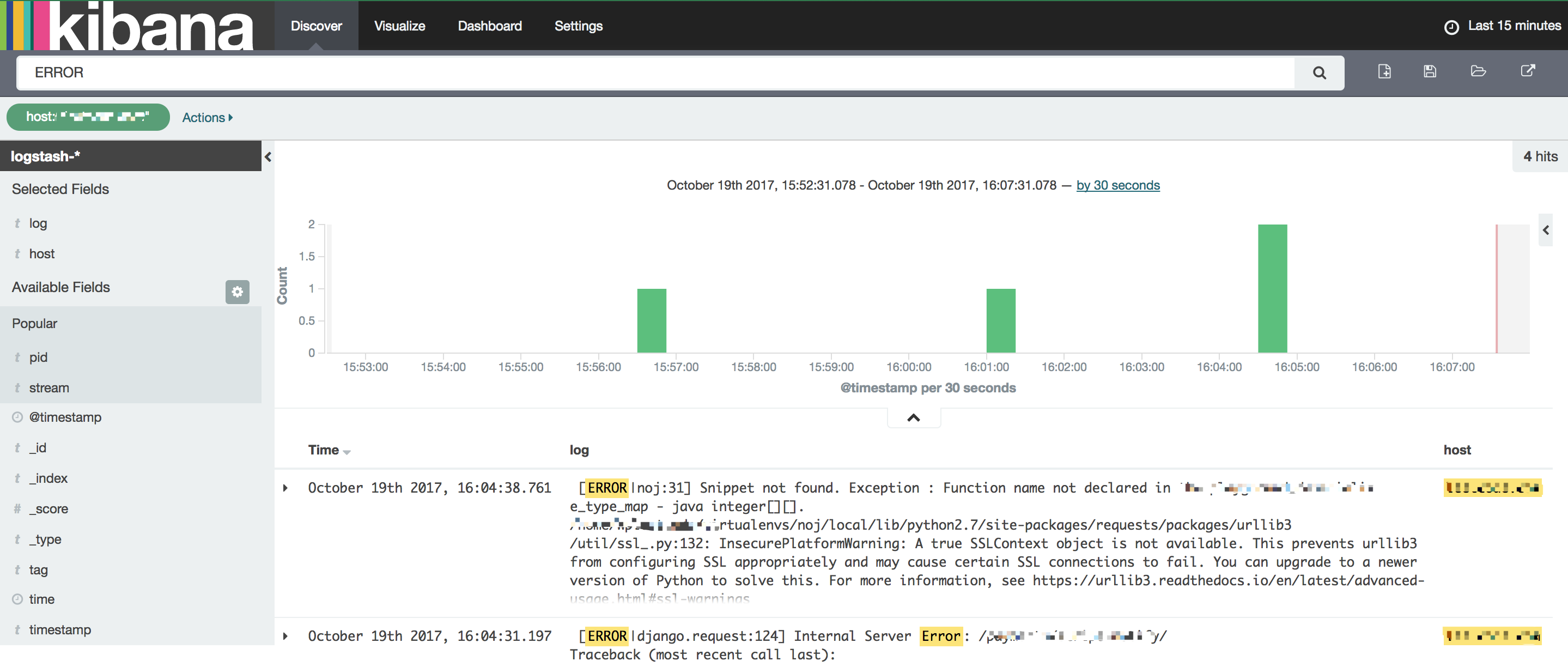

Here is my final Kibana screenshot:

Beautiful, isn’t it?

This post sets up the EFK tool chain in Kubernetes.

1. Setup ElasticSearch in Kubernetes

1.1. Setup ElasticSearch ReplicationController

Here is the ElasticSearch ReplicationController yaml. Please note that the volume uses hostPath: since I'm using nodeSelector to deploy ElasticSearch, it sticks to one specific node dedicated to logging.

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: elasticsearch-logging-v1
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    version: v1
    kubernetes.io/cluster-service: "true"
spec:
  replicas: 2
  selector:
    k8s-app: elasticsearch-logging
    version: v1
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        version: v1
        kubernetes.io/cluster-service: "true"
    spec:
      nodeSelector:
        logging: "true"
      containers:
      - image: gcr.io/google_containers/elasticsearch:v2.4.1
        name: elasticsearch-logging
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        env:
        - name: "ES_MAX_MEM"
          value: "1500m"
        - name: "ES_MIN_MEM"
          value: "500m"
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: es-persistent-storage
          mountPath: /data
      volumes:
      - name: es-persistent-storage
        hostPath:
          path: /mnt/volume-sfo2-03/logging_data
```
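The nodeSelector is what pins ElasticSearch to the logging node: a pod is only eligible for a node whose labels contain every key/value pair listed in nodeSelector, so the target node needs the label first (for example `kubectl label nodes <node-name> logging=true`). Below is a minimal Python sketch of just that matching rule; the node names and labels are hypothetical, and the real scheduler applies many more checks (resources, taints, affinity) on top of it.

```python
from typing import Dict, List


def node_matches(node_selector: Dict[str, str], node_labels: Dict[str, str]) -> bool:
    """True if every nodeSelector key/value pair is present on the node."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def eligible_nodes(node_selector: Dict[str, str], nodes: Dict[str, Dict[str, str]]) -> List[str]:
    """Names of nodes that pass the nodeSelector check (other scheduler checks ignored)."""
    return sorted(name for name, labels in nodes.items() if node_matches(node_selector, labels))


if __name__ == "__main__":
    # nodeSelector copied from the ReplicationController above.
    selector = {"logging": "true"}
    # Hypothetical cluster: only node-2 carries the logging label.
    nodes = {
        "node-1": {"kubernetes.io/hostname": "node-1"},
        "node-2": {"kubernetes.io/hostname": "node-2", "logging": "true"},
    }
    print(eligible_nodes(selector, nodes))  # ['node-2']
```

With a single labelled node, both replicas land on that node, so the hostPath always refers to the same local disk.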

1.2. Setup ElasticSearch Service

It’s a plain Kubernetes Service yaml file:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "Elasticsearch"
spec:
  type: NodePort
  ports:
  - port: 9200
    protocol: TCP
    targetPort: db
    nodePort: 31001
  selector:
    k8s-app: elasticsearch-logging
```
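The Service refers to the container port by name (`targetPort: db`) rather than by number, and Kubernetes resolves that name against the `ports` list of each selected pod. So traffic hitting nodePort 31001 on any node goes to Service port 9200 and then to container port 9200. The Python sketch below models only that name lookup plus the default NodePort range check (30000-32767, changeable with the API server's `--service-node-port-range` flag); the function names are hypothetical.

```python
from typing import Dict, List, Union

# Default kube-apiserver NodePort range; a cluster may be configured differently.
NODE_PORT_RANGE = range(30000, 32768)


def resolve_target_port(target_port: Union[int, str], container_ports: List[Dict]) -> int:
    """Map a Service targetPort (number or port name) to a container port number."""
    if isinstance(target_port, int):
        return target_port
    for port in container_ports:
        if port.get("name") == target_port:
            return port["containerPort"]
    raise ValueError(f"no container port named {target_port!r}")


def check_node_port(node_port: int) -> None:
    """Raise if node_port falls outside the default NodePort range."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(
            f"nodePort {node_port} is outside {NODE_PORT_RANGE.start}-{NODE_PORT_RANGE.stop - 1}"
        )


if __name__ == "__main__":
    # Container ports copied from the ElasticSearch ReplicationController.
    es_ports = [
        {"containerPort": 9200, "name": "db", "protocol": "TCP"},
        {"containerPort": 9300, "name": "transport", "protocol": "TCP"},
    ]
    check_node_port(31001)
    print(resolve_target_port("db", es_ports))  # 9200
```

Naming the port keeps the Service valid even if the container port number changes later, as long as the name stays `db`.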

1.3. Accessing ElasticSearch

For a quick test, use curl to send a request to the NodePort:

```
[root@kubernetes-master logging]# curl 127.0.0.1:31001
{
  "name" : "Ogress",
  "cluster_name" : "kubernetes-logging",
  "cluster_uuid" : "fOjPK7t2TqeR-GlhqGhzag",
  "version" : {
    "number" : "2.4.1",
    "build_hash" : "c67dc32e24162035d18d6fe1e952c4cbcbe79d16",
    "build_timestamp" : "2016-09-27T18:57:55Z",
    "build_snapshot" : false,
    "lucene_version" : "5.5.2"
  },
  "tagline" : "You Know, for Search"
}
```
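The same check can be scripted, for example in a setup script that waits for ElasticSearch before deploying Kibana. A minimal sketch using only the Python standard library, assuming ElasticSearch is reachable at 127.0.0.1:31001 as in the curl call above; `fetch_es_info` and `summarize` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Dict


def fetch_es_info(url: str = "http://127.0.0.1:31001", timeout: float = 5.0) -> Dict:
    """GET the ElasticSearch root endpoint and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def summarize(info: Dict) -> str:
    """Pick the cluster name and version out of the root-endpoint response."""
    return f'{info["cluster_name"]} running Elasticsearch {info["version"]["number"]}'


if __name__ == "__main__":
    try:
        print(summarize(fetch_es_info()))
    except (OSError, ValueError) as exc:  # connection refused, timeout, or a non-JSON body
        print(f"Elasticsearch not reachable: {exc}")
```

Against the response shown above, `summarize` would return `kubernetes-logging running Elasticsearch 2.4.1`.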

2. Setup Kibana in Kubernetes

2.1. Setup Kibana Deployment

Here is the Kibana Deployment yaml file. We also pin it to the logging node with nodeSelector. If you're going to access Kibana through a NodePort, make sure the KIBANA_BASE_URL environment variable is set to an empty value.

Also, Kibana needs to talk to ElasticSearch at http://elasticsearch-logging:9200, so please make sure kube-dns works correctly before setting up the Kibana Service.

I will write another post about how kube-dns works.

```yaml
apiVersion: extensions/v1beta1
```
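The short name `elasticsearch-logging` only works because kube-dns expands it through the pod's DNS search domains. Assuming Kibana also runs in the kube-system namespace and the cluster uses the default domain `cluster.local`, it becomes `elasticsearch-logging.kube-system.svc.cluster.local`. A small Python sketch with hypothetical helper names that builds that name and checks whether it resolves from wherever the script runs (for example inside a pod that has Python):

```python
import socket


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name that cluster DNS serves for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolves(host: str) -> bool:
    """True if the local resolver can turn `host` into an address."""
    try:
        socket.getaddrinfo(host, None)
        return True
    except OSError:  # socket.gaierror is a subclass of OSError
        return False


if __name__ == "__main__":
    fqdn = service_fqdn("elasticsearch-logging", "kube-system")
    print(fqdn, "resolves" if resolves(fqdn) else "does NOT resolve")
```

Outside the cluster the name is expected not to resolve; inside a kube-system pod, a failure here points at kube-dns rather than at Kibana.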

2.2. Setup Kibana Service

```yaml
apiVersion: v1
```

2.3. Accessing Kibana Service

For a quick test, use curl to send a request:

```
[root@kubernetes-master logging]# curl 127.0.0.1:31000
<script>var hashRoute = '/app/kibana';
var defaultRoute = '/app/kibana';
var hash = window.location.hash;
if (hash.length) {
  window.location = hashRoute + hash;
} else {
  window.location = defaultRoute;
}</script>
```
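This response means Kibana is up: the root path only serves a small bootstrap script that sends the browser to `/app/kibana`, keeping any `#fragment`. For a scripted check, here is a Python sketch that assumes the page looks exactly like the one above; it pulls `defaultRoute` out with a regular expression and mirrors the redirect rule. `default_route` and `redirect_target` are hypothetical names.

```python
import re
from typing import Optional

# Bootstrap page as returned by `curl 127.0.0.1:31000` above.
BOOTSTRAP_PAGE = """<script>var hashRoute = '/app/kibana';
var defaultRoute = '/app/kibana';
var hash = window.location.hash;
if (hash.length) {
  window.location = hashRoute + hash;
} else {
  window.location = defaultRoute;
}</script>"""


def default_route(page: str) -> Optional[str]:
    """Extract the defaultRoute value from the bootstrap page, if present."""
    match = re.search(r"var defaultRoute = '([^']*)'", page)
    return match.group(1) if match else None


def redirect_target(hash_route: str, default: str, url_hash: str) -> str:
    """Mirror the script: keep a non-empty #fragment, otherwise use the default route."""
    return hash_route + url_hash if url_hash else default


if __name__ == "__main__":
    route = default_route(BOOTSTRAP_PAGE)
    print(route)  # /app/kibana
    print(redirect_target("/app/kibana", route, "#/discover"))  # /app/kibana#/discover
```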

3. Setup Fluentd

This is the MOST complicated part.

Before deploying Fluentd to Kubernetes, we need to test fluentd.conf locally. You can set up Fluentd in a VM for testing.

3.1. Install Fluentd Locally or in a VM

Follow the Installation Notes here.

Please make sure you install the latest v0.12 version, which was v0.12.40 when this was written.

If you don't end up with v0.12.40 after installation, use the embedded gem to install it. Follow this post.

Here is what I have locally:

```
morganwu@localhost:~$ /opt/td-agent/embedded/bin/gem list fluentd

*** LOCAL GEMS ***

fluentd (0.12.40)
fluentd-ui (0.4.4)
# or we can use `-v "~> 0.12.0"` to install the latest 0.12.xx version
morganwu@localhost:~$ /opt/td-agent/embedded/bin/gem install fluentd -v "~> 0.12.0"
Fetching: fluentd-0.12.43.gem (100%)
Successfully installed fluentd-0.12.43
Parsing documentation for fluentd-0.12.43
Installing ri documentation for fluentd-0.12.43
Done installing documentation for fluentd after 3 seconds
1 gem installed
```
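The `~> 0.12.0` argument is RubyGems' pessimistic version constraint: it accepts 0.12.0 and any later 0.12.x release but stops before 0.13, which is why the install above picked 0.12.43 rather than a 1.x release. A minimal Python sketch of that rule for plain numeric versions; pre-release tags such as `.rc1` are deliberately not handled, unlike in real RubyGems.

```python
from typing import Tuple


def segments(version: str) -> Tuple[int, ...]:
    """Split a plain numeric version like '0.12.43' into integers."""
    return tuple(int(part) for part in version.split("."))


def normalized(parts: Tuple[int, ...]) -> Tuple[int, ...]:
    """Drop trailing zeros so that 0.12 and 0.12.0 compare as equal."""
    trimmed = list(parts)
    while len(trimmed) > 1 and trimmed[-1] == 0:
        trimmed.pop()
    return tuple(trimmed)


def pessimistic_upper_bound(base: str) -> Tuple[int, ...]:
    """Exclusive upper bound of '~> base': drop the last segment, then bump the new last one."""
    parts = list(segments(base))
    if len(parts) > 1:
        parts.pop()
    parts[-1] += 1
    return tuple(parts)


def satisfies_pessimistic(version: str, base: str) -> bool:
    """True if `version` satisfies the constraint '~> base'."""
    v = normalized(segments(version))
    lower = normalized(segments(base))
    upper = normalized(pessimistic_upper_bound(base))
    return lower <= v < upper


if __name__ == "__main__":
    for candidate in ["0.12.40", "0.12.43", "0.14.0", "1.4.2"]:
        print(candidate, satisfies_pessimistic(candidate, "0.12.0"))
```

Note the difference between `~> 0.12.0` (below 0.13) and `~> 0.12` (below 1.0): the number of segments in the constraint decides which one gets bumped.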

3.2. Install Fluentd ElasticSearch Plugin

Use the embedded gem to install fluent-plugin-elasticsearch:

```
sudo apt-get install build-essential
/opt/td-agent/embedded/bin/gem install fluent-plugin-elasticsearch -v 1.10.3
# update 2019-04-05: installing this plugin now pulls in fluentd 1.4.2 automatically as a dependency,
# even if you pin the plugin version to 1.10.2,
# so we need to manually uninstall the new fluentd version, or else we need a conf written for the new version
```
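One way to spot the problem described in those comments is to check whether `gem list` now reports more than one fluentd version. `gem list` prints each gem as `name (version, version, ...)`; the Python sketch below parses that format and flags any fluentd release outside the 0.12 series. The sample output is hypothetical (shaped like the listing in 3.1), and the helper names are made up for illustration.

```python
import re
from typing import Dict, List

GEM_LINE = re.compile(r"^(?P<name>[\w.-]+) \((?P<versions>[^)]*)\)$")

# Hypothetical `gem list` output after the plugin pulled in a newer fluentd.
SAMPLE = """
*** LOCAL GEMS ***

fluent-plugin-elasticsearch (1.10.3)
fluentd (1.4.2, 0.12.43)
fluentd-ui (0.4.4)
"""


def parse_gem_list(output: str) -> Dict[str, List[str]]:
    """Parse `gem list` output into {gem name: [installed versions]}."""
    gems: Dict[str, List[str]] = {}
    for line in output.splitlines():
        match = GEM_LINE.match(line.strip())
        if not match:
            continue  # headers such as '*** LOCAL GEMS ***' and blank lines
        versions = []
        for entry in match.group("versions").split(","):
            entry = entry.strip()
            if entry.startswith("default: "):
                entry = entry[len("default: "):]
            if entry:
                versions.append(entry.split()[0])  # drop any platform suffix
        gems[match.group("name")] = versions
    return gems


def unexpected_fluentd(gems: Dict[str, List[str]], series: str = "0.12") -> List[str]:
    """fluentd versions that do not belong to the expected release series."""
    return [v for v in gems.get("fluentd", []) if not v.startswith(series + ".")]


if __name__ == "__main__":
    print(unexpected_fluentd(parse_gem_list(SAMPLE)))  # ['1.4.2']
```

Any flagged version can then be removed with `/opt/td-agent/embedded/bin/gem uninstall fluentd -v <version>`.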

3.3. Work out the fluentd.conf little by little

- Use a Ruby regular expression editor to test the regular expressions.

- Use For a Good Strftime to test the time format.

- Read the Fluentd documentation carefully.

- Use the stdout plugin to debug the Fluentd conf.
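The first two tips can also be tried in a throwaway script before touching fluentd.conf. Here is a Python sketch using a hypothetical log line, regexp and time format. Keep in mind that Fluentd's regexps are Ruby regexps, which spell named groups `(?<name>...)`, whereas Python's `re` needs `(?P<name>...)`. Python's `strptime` shares common directives such as `%Y %m %d %H %M %S %z` with Ruby, though not every extension.

```python
import re
from datetime import datetime

# Hypothetical application log line.
LINE = "2017-10-05 12:34:56 +0000 [warn] payment-service: retrying request id=42"
# Ruby/Fluentd spelling: ^(?<time>[^\[]+) \[(?<level>\w+)\] (?<service>[\w-]+): (?<message>.*)$
PATTERN = re.compile(r"^(?P<time>[^\[]+) \[(?P<level>\w+)\] (?P<service>[\w-]+): (?P<message>.*)$")
TIME_FORMAT = "%Y-%m-%d %H:%M:%S %z"


def parse_line(line: str) -> dict:
    """Apply the regexp, then parse the captured time with TIME_FORMAT."""
    match = PATTERN.match(line)
    if match is None:
        raise ValueError(f"regexp did not match: {line!r}")
    record = match.groupdict()
    record["time"] = datetime.strptime(record["time"], TIME_FORMAT)
    return record


if __name__ == "__main__":
    print(parse_line(LINE))
```

This is roughly what a Fluentd regexp parser does with a `time_format`; once the sample lines parse here, port the regexp back to Ruby syntax and confirm with the stdout plugin.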

3.4. Create ConfigMap in Kubernetes

Assuming you have a working fluentd.conf now, let's create a ConfigMap for Fluentd to use:

```
[root@kubernetes-master logging]# kubectl -n kube-system create cm cm-fluentd-conf --from-file=td-agent.conf=./cm-fluentd-conf
[root@kubernetes-master logging]# kubectl -n kube-system get cm
NAME              DATA      AGE
cm-fluentd-conf   1         6d
```

3.5. Setup Fluentd DaemonSet

Note that we mount the cm-fluentd-conf volume at the /etc/fluent/config.d directory inside the container.

When we created the ConfigMap above, we specified td-agent.conf=./cm-fluentd-conf. This means that inside the container there will be a file named td-agent.conf whose content comes from the ./cm-fluentd-conf file used to create the ConfigMap.
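In `--from-file=td-agent.conf=./cm-fluentd-conf`, the part before `=` is the ConfigMap key and the part after it is the local file; without a `key=` prefix, kubectl uses the file's base name as the key. When the ConfigMap is mounted as a volume, each key becomes a file of the same name under `mountPath`. The Python sketch below models both steps; the function names are hypothetical, and it ignores details such as key validation, directories and binary data.

```python
import os
import posixpath
import tempfile
from typing import Dict, Tuple


def from_file_entry(spec: str) -> Tuple[str, str]:
    """Split a --from-file value into (key, path); without 'key=', the key is the base name."""
    if "=" in spec:
        key, path = spec.split("=", 1)
    else:
        key, path = os.path.basename(spec), spec
    return key, path


def build_configmap_data(*specs: str) -> Dict[str, str]:
    """Read each file into a ConfigMap-style data map (text files only)."""
    data: Dict[str, str] = {}
    for spec in specs:
        key, path = from_file_entry(spec)
        with open(path, encoding="utf-8") as handle:
            data[key] = handle.read()
    return data


def mounted_paths(data: Dict[str, str], mount_path: str) -> Dict[str, str]:
    """Path of each key inside the container when the ConfigMap is mounted at mount_path."""
    return {key: posixpath.join(mount_path, key) for key in data}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Stand-in for the local ./cm-fluentd-conf file (hypothetical content).
        local_conf = os.path.join(tmp, "cm-fluentd-conf")
        with open(local_conf, "w", encoding="utf-8") as handle:
            handle.write("<match **>\n  @type stdout\n</match>\n")
        data = build_configmap_data(f"td-agent.conf={local_conf}")
        print(mounted_paths(data, "/etc/fluent/config.d"))
        # {'td-agent.conf': '/etc/fluent/config.d/td-agent.conf'}
```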

Note that it can read every log under the /var/log directory.

We deploy it as a DaemonSet to make sure it runs on every node.

We should make sure that buffer_chunk_limit * buffer_queue_limit (see buffer-plugin-overview) won't exceed the memory limit specified in the yaml file below:

```yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: fluentd-es-v2.0.1
  namespace: kube-system
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    version: v2.0.1
spec:
  template:
    metadata:
      labels:
        k8s-app: fluentd-es
        kubernetes.io/cluster-service: "true"
        version: v2.0.1
    spec:
      containers:
      - name: fluentd-es
        image: gcr.io/google_containers/fluentd-elasticsearch:v2.0.1
        env:
        - name: FLUENTD_ARGS
          value: --no-supervisor -q
        resources:
          limits:
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: cm-fluentd-conf
          mountPath: /etc/fluent/config.d
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      - name: cm-fluentd-conf
        configMap:
          name: cm-fluentd-conf
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
```
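To make the memory rule concrete: with a memory buffer, Fluentd v0.12 can queue up to `buffer_queue_limit` chunks of at most `buffer_chunk_limit` bytes each, per buffered output. The Python sketch below checks that product against the container's `limits.memory`, assuming sizes use Fluentd's `k`/`m`/`g`/`t` suffixes (powers of 1024) and the limit uses Kubernetes' `Ki`/`Mi`/`Gi` forms. The buffer values are hypothetical, and the product is only the buffer's ceiling, not Fluentd's total memory use, so leave headroom.

```python
import re

FLUENTD_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3, "t": 1024 ** 4}
K8S_UNITS = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def fluentd_size(value: str) -> int:
    """Parse a Fluentd size such as '8m' or '2M' (suffixes are powers of 1024)."""
    match = re.fullmatch(r"(\d+)([kmgt]?)", value.strip(), re.IGNORECASE)
    if not match:
        raise ValueError(f"unsupported Fluentd size: {value!r}")
    number, unit = match.groups()
    return int(number) * FLUENTD_UNITS.get(unit.lower(), 1)


def k8s_memory(value: str) -> int:
    """Parse a Kubernetes memory quantity; only plain bytes and Ki/Mi/Gi are handled here."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi)?", value.strip())
    if not match:
        raise ValueError(f"unsupported quantity: {value!r}")
    number, unit = match.groups()
    return int(number) * (K8S_UNITS[unit] if unit else 1)


def buffer_fits(chunk_limit: str, queue_limit: int, memory_limit: str, outputs: int = 1) -> bool:
    """True if the worst-case memory buffer (chunk x queue x outputs) stays under the limit."""
    worst_case = fluentd_size(chunk_limit) * queue_limit * outputs
    return worst_case < k8s_memory(memory_limit)


if __name__ == "__main__":
    # Hypothetical buffer settings checked against the DaemonSet's 512Mi limit.
    print(buffer_fits("2m", 8, "512Mi"))    # 16 MiB of buffer -> True
    print(buffer_fits("8m", 256, "512Mi"))  # 2 GiB of buffer  -> False
```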

4. Overview of the deployed components in Kubernetes

```
[root@kubernetes-master logging]# kubectl -n kube-system get rc,svc,cm,po
```
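Beyond eyeballing this list, one property worth checking is that the Fluentd DaemonSet really has a pod on every node. A Python sketch of that check, assuming you load the output of `kubectl get nodes -o json` and `kubectl -n kube-system get pods -l k8s-app=fluentd-es -o json` with `json.load`; it only reads each node's `metadata.name` and each pod's `spec.nodeName`, and the helper name is hypothetical.

```python
from typing import Dict, List


def nodes_without_pod(nodes_json: Dict, pods_json: Dict) -> List[str]:
    """Names of nodes that have no pod from pods_json scheduled on them."""
    all_nodes = {item["metadata"]["name"] for item in nodes_json.get("items", [])}
    covered = {item["spec"].get("nodeName") for item in pods_json.get("items", [])}
    return sorted(all_nodes - covered)


if __name__ == "__main__":
    # Hypothetical, trimmed-down `-o json` output for a three-node cluster.
    nodes = {"items": [{"metadata": {"name": n}} for n in ("master", "node-1", "node-2")]}
    pods = {"items": [{"spec": {"nodeName": "node-1"}}, {"spec": {"nodeName": "node-2"}}]}
    print(nodes_without_pod(nodes, pods))  # ['master']
```

Nodes the DaemonSet skips on purpose (for example a tainted master) will also be reported, so read the result with your cluster's taints in mind.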